What is YOLO?

YOLO, which stands for "You Only Look Once," is a popular object detection model that revolutionized how computers identify and locate objects in images. Introduced in 2015 by Joseph Redmon and colleagues, YOLOv1 was the first version of this model, designed to be fast and efficient by processing an entire image in a single pass, unlike earlier methods that scanned images multiple times.

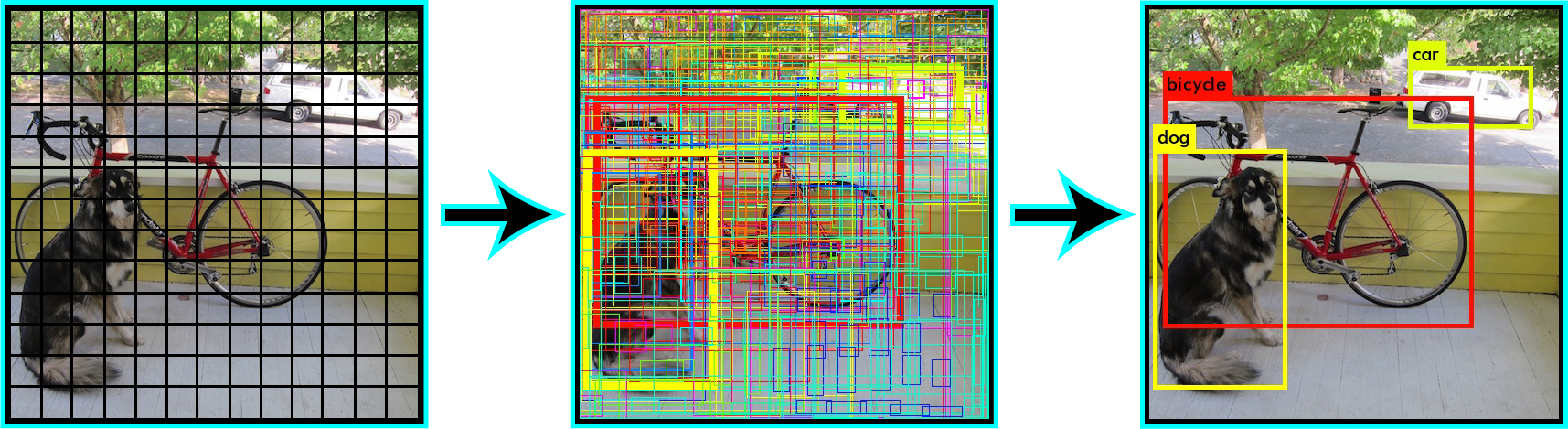

For beginners, think of YOLOv1 as a smart camera that can look at a picture and instantly tell you what objects are in it (e.g., a dog, a car) and where they are located, all in one quick step.

Figure 1: YOLO detecting objects in an image, identifying and localizing objects like cars and bi-cycle and dog. reference

How Does YOLOv1 Work?

YOLOv1 treats object detection as a single regression problem. Instead of analyzing an image piece by piece, it divides the image into a grid and predicts:

- Bounding boxes: Rectangular boxes around detected objects, defined by coordinates \((x, y, w, h)\), where \((x, y)\) is the center, and \(w\) and \(h\) are the width and height.

- Class probabilities: The likelihood that an object belongs to a specific class (e.g., "dog" or "cat").

- Confidence scores: How confident the model is that a box contains an object and how accurate the box is.

The image is divided into an \(S \times S\) grid (in YOLOv1, \(S = 7\)). Each grid cell predicts \(B\) bounding boxes (typically \(B = 2\)) and their confidence scores, along with class probabilities for \(C\) classes. The output is a tensor of shape \(S \times S \times (B \cdot 5 + C)\), where 5 accounts for the box coordinates and confidence score.

Equation 1: YOLOv1 output tensor structure, where \(S\) is the grid size, \(B\) is the number of bounding boxes per cell, and \(C\) is the number of classes.

YOLOv1 Architecture

The YOLOv1 model is built using a convolutional neural network (CNN) inspired by GoogLeNet. It has 24 convolutional layers for feature extraction, followed by 2 fully connected layers to predict bounding boxes and class probabilities. The input image is resized to \(448 \times 448\) pixels, and the network processes it to produce predictions in one forward pass.

Figure 2: YOLOv1 architecture, showing convolutional layers followed by fully connected layers for object detection. reference

The architecture can be summarized as:

- Convolutional Layers: Extract features like edges, shapes, and textures from the image.

- Max-Pooling Layers: Reduce spatial dimensions to make the model faster and less prone to overfitting.

- Fully Connected Layers: Combine features to predict bounding boxes and class probabilities.

Loss Function in YOLOv1

YOLOv1 uses a custom loss function to optimize its predictions. The loss function balances three components:

- Localization Loss: Penalizes errors in bounding box coordinates (\(x, y, w, h\)).

- Confidence Loss: Ensures accurate confidence scores for boxes with and without objects.

- Classification Loss: Penalizes incorrect class predictions.

The loss function can be expressed as:

Equation 2: Simplified YOLOv1 loss function (localization component), where \(\lambda_{\text{coord}}\) weights the localization loss, and \(\mathbb{1}_{ij}^{\text{obj}}\) indicates if an object exists in the box.

This loss ensures the model learns to predict accurate boxes and classes while being computationally efficient.

Advantages and Limitations of YOLOv1

Advantages:

- Fast: Processes images in a single pass, making it real-time capable.

- Global Context: Considers the entire image, reducing false positives.

- Simple: Easy to implement and understand compared to multi-stage detectors.

Limitations:

- Struggles with small objects due to the coarse \(7 \times 7\) grid.

- Localization errors: Bounding box predictions can be less accurate than other models.

- Limited boxes per grid cell: Only predicts two boxes per cell, which can miss overlapping objects.

Conclusion

YOLOv1 is a groundbreaking model that made object detection fast and accessible. While newer versions like YOLOv8 have improved accuracy and capabilities, YOLOv1 remains a great starting point for beginners due to its simplicity. Understanding its grid-based approach and CNN architecture lays the foundation for exploring more advanced object detection models.